Acceleration is all you need (now): Techniques powering OctoStack's 10x performance boost

In this article

Approaches to optimize your GenAI model inferences

Effective use of the NVIDIA H100 FP8 precision

Optimal tensor parallelism with the new NVIDIA CustomAllReduce kernel

Efficient GPU compute utilization with CUDA graphs

Faster output with Speculative decoding

Improved GPU utilization with Dynamic SplitFuse

OctoStack delivers exponential improvements with scale

GenAI apps are changing the world. We’ve seen through our customers how new apps are simplifying how users get new information, interact with services, and unlock creative potential. Even as organizations explore the newest models and latest capabilities, they are acutely aware of the resource impact of the success of these applications. Builders want efficiency, customizability and reliability, as they build for this growing demand. And underpinning all of these is the need to optimize inferences, a problem space that OctoAI has been focused on since its founding.

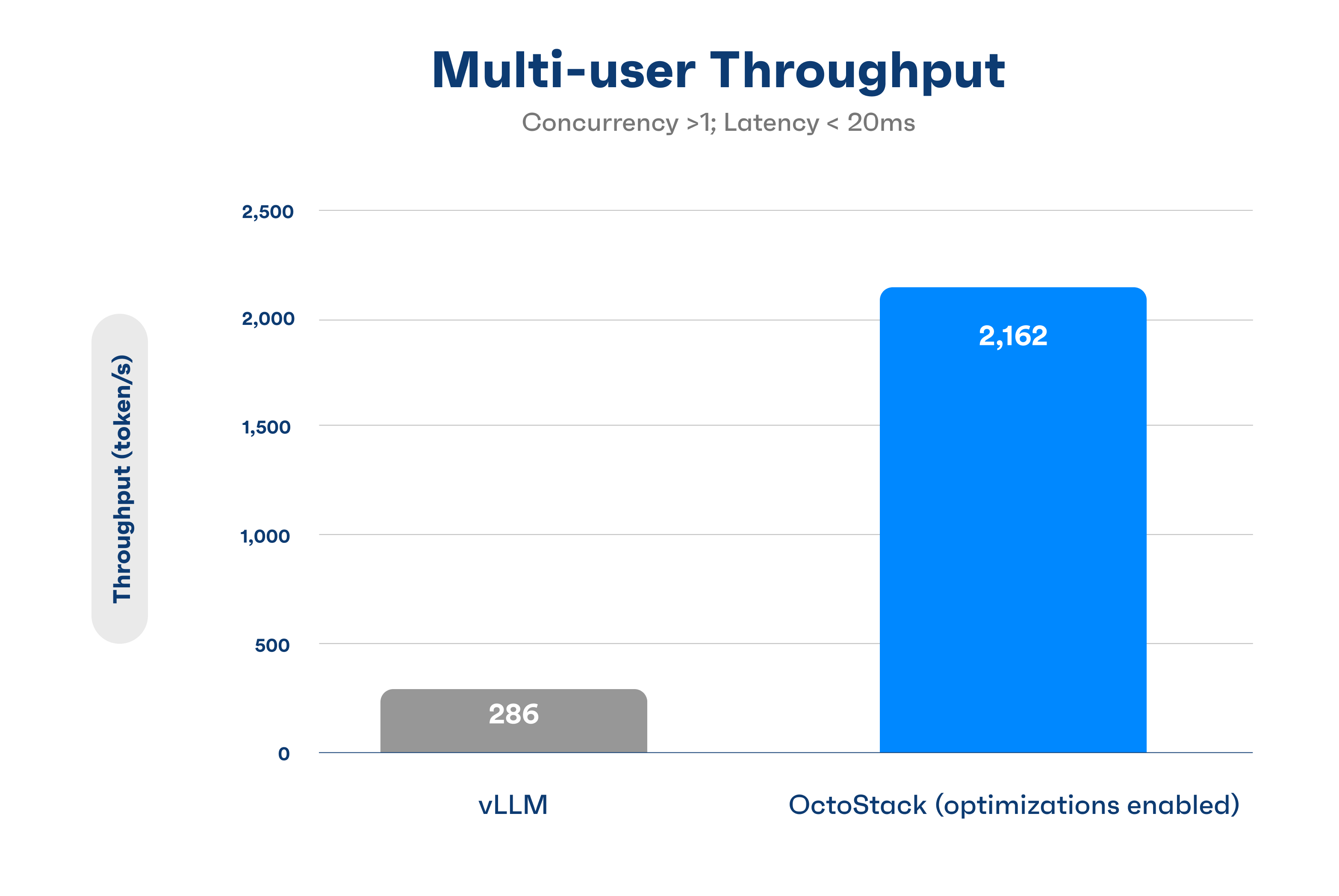

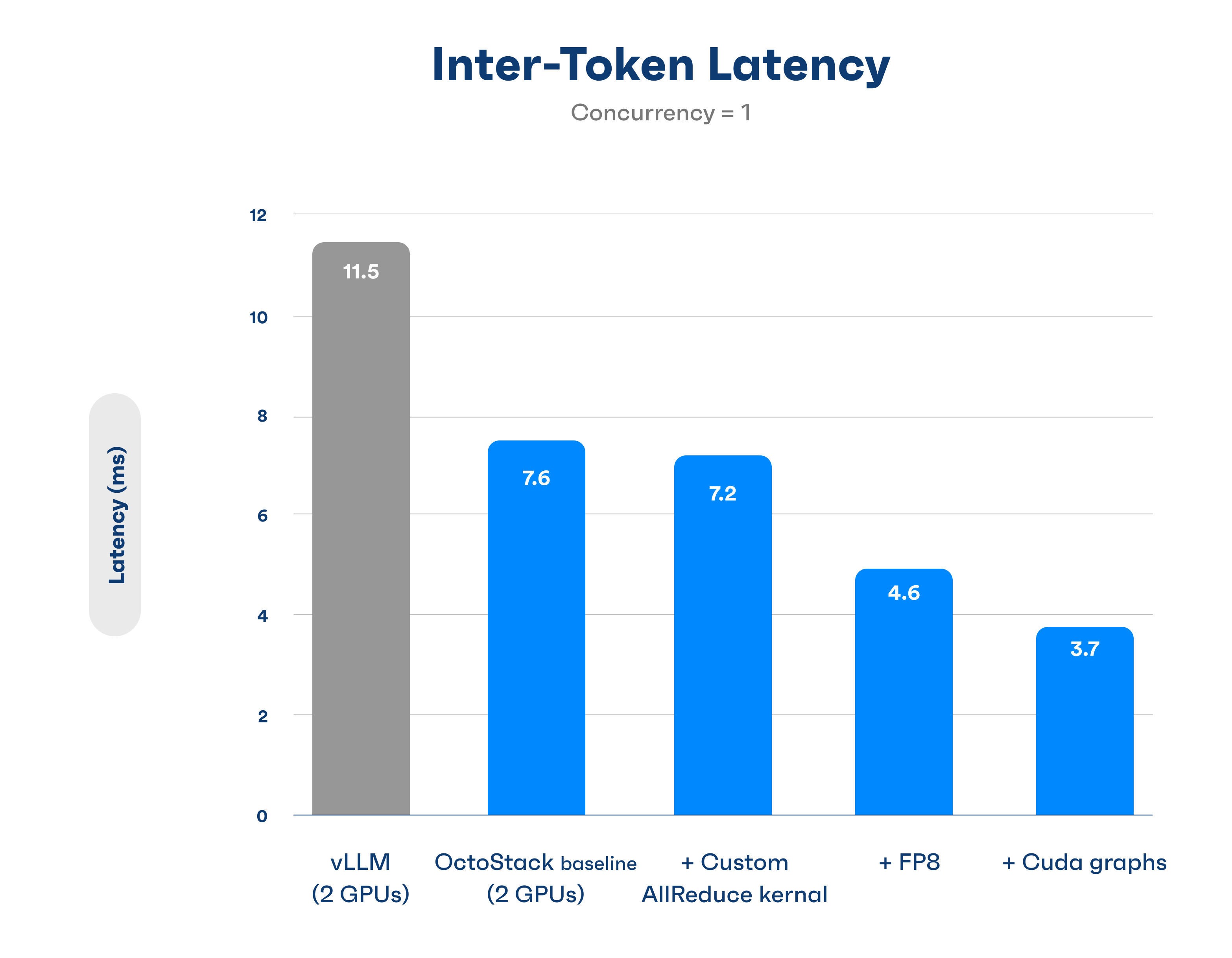

Building on our years of experience across the inference stack, we have built a number of leading edge optimization technologies into the OctoAI systems stack. With OctoStack – our turnkey GenAI serving stack offering for the enterprise environment – these are available as levers or knobs that builders can choose to turn on and off for their deployment. The chart below captures results from preliminary benchmarking of a few optimizations we have added to the OctoAI systems stack in the last few months. Our preliminary benchmarking shows 4x to 5x improvements in low concurrency use cases, and orders of magnitude improvement for higher concurrency use cases.

Throughput improvements: OctoStack evaluations with single user configurations

Latency improvements from recently added optimizations

These are optional configurations available for OctoStack today. If you think OctoStack may be a fit for your organization’s needs, reach out to our team for an assessment.

The rest of this article is a brief overview of these optimization techniques, and new technologies that we are investigating and in the process of incorporating into our stack in the coming months.

Approaches to optimize your GenAI model inferences

GenAI models, and large language models (LLMs) in particular, are among the most resource-intensive workloads in the industry today. Running inferences requires extensive coordination and orchestration across data storage, memory, and compute caches, all dealing with tens of gigabytes of data and billions of coordinated computations. Techniques to improve these span the entire GenAI serving stack, from the model, to the serving layer, to the computational kernel and techniques used. The following is a discussion of six such techniques. These are not in any particular order.

Effective use of the NVIDIA H100 FP8 precision

GenAI models have larger memory requirements than typical data workloads. As an example, a database may have a minimum requirement of 1 to 2 GB of memory. In contrast, even a small LLM like Meta’s recent Llama 3 8B model requires nearly 20 GB of memory. For such models, the primary factor determining resource requirements (i.e., which GPUs and how many) is often the memory requirement, especially for small volumes of inferences where the available compute cannot be fully utilized alongside the memory.

One approach to reduce the memory footprint is quantization, reducing the space needed to capture each number involved in the model’s operation, like the model’s weights - which are usually in the 16 bit FP16 format. Moving from a 16 bit floating point (FP16) representation to an 8 bit integer representation (like INT8) results in halving the memory needed for weights. But post training quantization from FP16 to INT8 results in a steep drop in quality. Part of the reason is that the training of the original model is accomplished with floating point numbers and floating point weights, and the quantization to a non floating point representation loses much of the fidelity achieved in the original training. This degradation takes away the key benefits of moving to newer and larger models trained with bigger datasets. Because of this, quantization to integer representation has not been broadly adopted for quality sensitive production use cases yet.
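As a rough illustration of the halving described above, the weight footprint can be estimated from the parameter count and the bytes used per parameter. This is a back-of-the-envelope sketch: the function name is ours, the 8 billion parameter count is an approximation for Llama 3 8B, and the figure covers weights only (runtime state such as the KV cache and activations comes on top, which presumably accounts for the gap to the roughly 20 GB quoted earlier).

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"FP16": 2, "INT8": 1, "FP8": 1}

def weight_memory_gb(num_params: float, fmt: str) -> float:
    """Estimate weight storage in GB (10^9 bytes); weights only."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9

# Approximate parameter count for an 8B-class model (assumption).
params = 8e9

for fmt in ("FP16", "INT8", "FP8"):
    print(f"{fmt}: {weight_memory_gb(params, fmt):.1f} GB")
```

Under these assumptions, moving from FP16 to either 8 bit format cuts the weight footprint from about 16 GB to about 8 GB.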

Another approach to quantization is the new FP8 format. FP8 is an 8 bit floating point representation, introduced collaboratively by NVIDIA, Arm and Intel. It provides a higher dynamic range than integer based quantization schemes, making it suitable for quantizing more components of an LLM (in particular activations and gradients). This also enables better alignment between the numbers used in inference computations and in training, even in post-training quantization. FP8 is also natively supported in the new NVIDIA H100 GPUs, enabling better computational efficiencies through native hardware support.

While hardware support and floating point representation have benefits, effective use of FP8 to unlock its quality-performance capabilities requires correct implementation at multiple levels in the inference process. This needs to be done on a model by model basis, and at multiple components like the weights, the activation functions and the KV cache. This also requires the right choice of FP8 representation for each of these, based on the model and value ranges to be converted. Examples of this include selection of the two FP8 formats available on H100 hardware, e4m3 and e5m2, choice of per tensor or per channel scaling, the selection and configuration of calibration and smoothing procedures and the downstream performance implications of all of these choices. All of this requires deep expertise with the inference operations and the impact of these changes. Incorrect assumptions and implementation at any of these can result in limited performance improvement and unpredictable quality impact.
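To make the scaling choice concrete, here is a minimal pure-Python simulation of per tensor scaling into the e4m3 format. It is a sketch for intuition only, not OctoAI's implementation and not how a GPU kernel does it: the helper names are hypothetical, the rounding is simulated in software, and calibration and smoothing are left out. The format constants (largest finite values of 448 for e4m3 and 57344 for e5m2, 3 mantissa bits for e4m3) are properties of the FP8 formats themselves.

```python
import math

E4M3_MAX = 448.0     # largest finite e4m3 value (more precision, less range)
E5M2_MAX = 57344.0   # largest finite e5m2 value (shown for comparison only)

def round_to_e4m3(x: float) -> float:
    """Simulate rounding x to the nearest e4m3 value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    mag = min(abs(x), E4M3_MAX)
    _, e = math.frexp(mag)            # mag = m * 2**e with 0.5 <= m < 1
    exponent = max(e - 1, -6)         # -6 is the smallest normal exponent
    step = 2.0 ** (exponent - 3)      # 3 mantissa bits: 8 steps per power of two
    return math.copysign(min(round(mag / step) * step, E4M3_MAX), x)

def quantize_per_tensor(values):
    """One scale for the whole tensor, mapping its largest magnitude to 448."""
    amax = max(abs(v) for v in values)
    scale = amax / E4M3_MAX if amax > 0 else 1.0
    return [round_to_e4m3(v / scale) for v in values], scale

def dequantize(quantized, scale):
    """Recover approximate original values from FP8 values and the scale."""
    return [q * scale for q in quantized]

weights = [0.02, -0.5, 1.3, -3.2]      # hypothetical weight values
quantized, scale = quantize_per_tensor(weights)
restored = dequantize(quantized, scale)
for w, r in zip(weights, restored):
    print(f"{w:+.4f} -> {r:+.4f}")
```

Per channel scaling would apply the same procedure to each channel separately, so that one channel with large values does not push small values in other channels toward the coarse end of the format.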

When correctly implemented, FP8 can deliver performance improvements with near zero quality degradation. NVIDIA’s published results report that BERT model inferences with FP8 (post training quantization) have 99.9% of the accuracy of FP16. This is also validated by internal testing at OctoAI. In tests with the Mixtral 8x7B model, we observed that inferences served by the FP8 quantized version delivered LongBench benchmark results within 0.5% of the FP16 results (713 versus 716), and a semantic match consistency of 94.7% between FP16 and FP8 results (evaluated using an internal GTE-Large embeddings based semantic evaluation framework). With these, FP8 brings to most use cases the quality strengths of larger new models, while reducing the resource needs.

Optimal tensor parallelism with the new NVIDIA CustomAllReduce kernel

Computing language model outputs on a GPU is done by executing a sequence of CUDA kernels. These kernels execute the mathematical operations on the GPU. Traditionally, machine learning inference has used a single GPU per deployment replica to keep deployment simple and minimize complexity. With the growth in model sizes, effective model execution today must span multiple GPUs, and requires coordinated operation across these GPUs. When sharing data across GPUs, most developers will and should first use NVIDIA’s Collective Communications Library (NCCL), which simplifies inter-GPU communication on NVIDIA GPUs. The OctoAI team continuously evaluates performance and tradeoffs across kernel options. We have seen that the new CustomAllReduce kernel, released as part of the NVIDIA TRT LLM project and building on the commonly used operations in the NCCL library, results in the best performance for forward pass computations in language models. We have now added the ability to use this new kernel with the OctoAI stack, resulting in faster inference execution and lower inter token latency with no quality tradeoffs.

Tensor parallelism is the particular form of GPU compute parallelism we use to distribute each computation across multiple GPUs, so that each GPU only depends on a subset of the weights. This is in contrast to data parallelism, another parallelism strategy where the same weights are replicated across multiple GPUs but the input data is segmented to be run in parallel across these replicas. Implementing tensor parallelism effectively includes both the selection of the optimal parallelization strategy (or number of GPUs) for the desired use case and expected volume, and the right selection of compute and communication kernels. As an example, OctoAI’s benchmarking has shown that going from 2 to 4 parallel GPUs is particularly valuable for high concurrency use cases, which are the de facto norm for SaaS or managed services deployments, while still balancing the communication overhead and reduced serving flexibility that come with even more GPUs per serving replica. At moderate concurrency levels of 50 or more, we were able to observe a cumulative improvement of more than 5x compared to alternative best in class DIY implementations on the same GPU footprint. The impact of this parallelism is not as apparent at smaller concurrency levels. When configuring an OctoStack deployment, we work with customers to choose the right tensor parallelism level based on anticipated usage, and to tune this as they learn more about traffic and utilization in pilots and production usage.
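The role of the all-reduce step in tensor parallelism can be seen in a toy example. The sketch below simulates one common sharding scheme (splitting a weight matrix by rows) with plain Python lists standing in for GPUs: each simulated GPU multiplies its slice of the input by its slice of the weights, and an all-reduce sums the partial results. The function names are ours, and this is an illustration of the general idea rather than the OctoAI stack or the CustomAllReduce kernel itself.

```python
def matmul(x, w):
    """Multiply a vector x (length d_in) by a matrix w (d_in rows, d_out columns)."""
    d_out = len(w[0])
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(d_out)]

def all_reduce_sum(partials):
    """Element-wise sum of per-GPU partial results (what an all-reduce computes)."""
    return [sum(vals) for vals in zip(*partials)]

def tensor_parallel_matmul(x, w, num_gpus):
    """Shard the rows of w (and matching entries of x) across simulated GPUs."""
    d_in = len(x)
    assert d_in % num_gpus == 0, "input dimension must divide evenly"
    shard = d_in // num_gpus
    partials = []
    for rank in range(num_gpus):
        lo, hi = rank * shard, (rank + 1) * shard
        # Each GPU holds only its own slice of the weights.
        partials.append(matmul(x[lo:hi], w[lo:hi]))
    # Communication step: combine partial outputs across GPUs.
    return all_reduce_sum(partials)

x = [1, 2, 3, 4]
w = [[1, 0], [0, 1], [2, 1], [1, 3]]
print(matmul(x, w))                      # single-GPU reference
print(tensor_parallel_matmul(x, w, 2))   # same result from 2 shards
```

Because an all-reduce like this runs for every sharded layer on every forward pass, the speed of that communication kernel directly affects inter token latency.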

Efficient GPU compute utilization with CUDA graphs

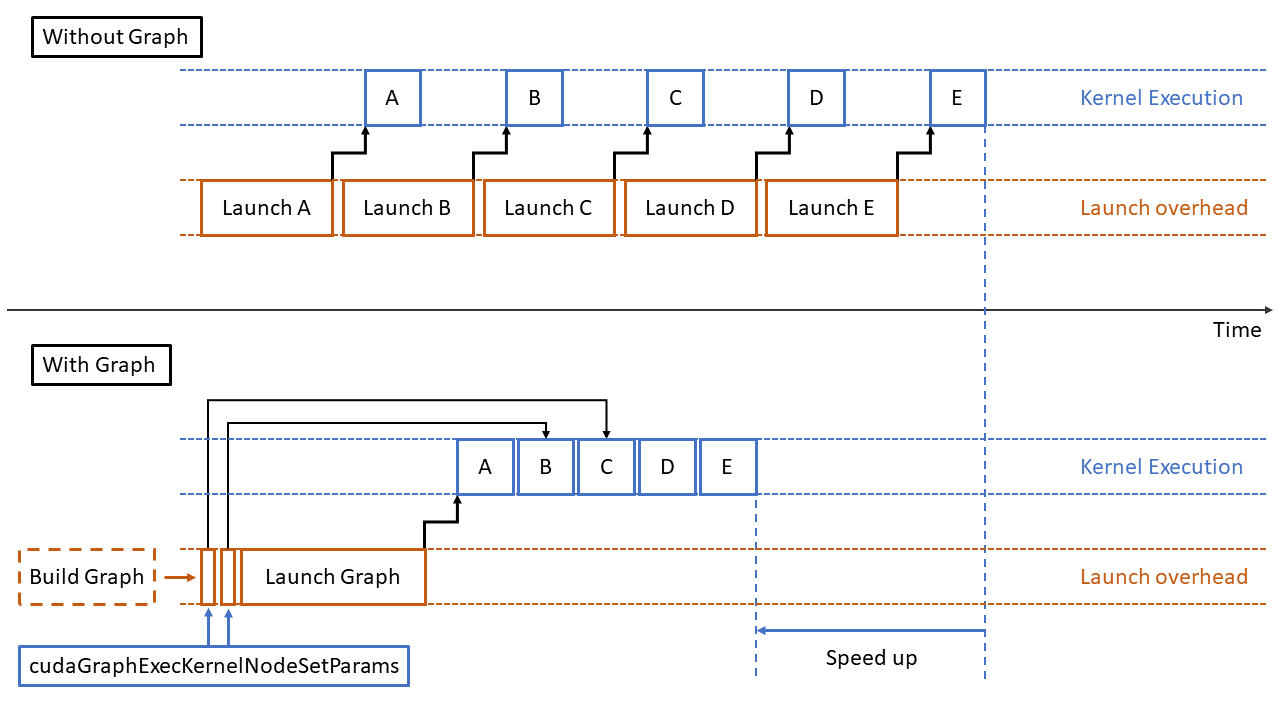

As discussed in the previous segment, computations on the GPU are executed within kernels. These GPU kernels are invoked by the driving server process on the CPU. Each invocation includes CPU and GPU dispatch overhead, which takes a finite amount of time, on the order of microseconds, depending on the kernel and launch parameters. With the growing speed of GPU compute and the increasing amount of data, this overhead can become significant for certain models and parallelism strategies, especially with smaller models and at higher degrees of tensor parallelism sharding. As a result, sequential execution of individual GPU kernels can become increasingly inefficient, with the overhead leading to wasted GPU cycles.

CUDA graphs were introduced by NVIDIA to address this issue. With CUDA graphs, instead of launching individual GPU operations, the CUDA kernels are sequenced and grouped together as a dependency tree (or graph) and sent for execution in one go. This results in an initially higher overhead for the CPU operation, but cumulatively speeds up the execution, as the graphic from NVIDIA below illustrates. The OctoAI inference stack now implements CUDA graph enabled scheduling to take advantage of these efficiencies.

Source: https://developer.nvidia.com/blog/constructing-cuda-graphs-with-dynamic-parameters/

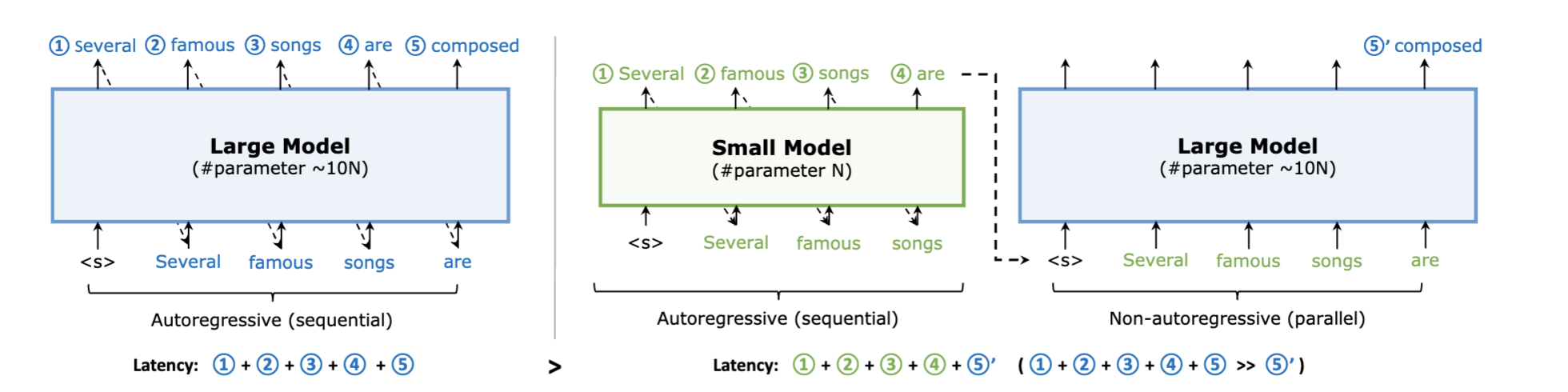
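The tradeoff between per-kernel launches and a single graph launch can be approximated with a simple cost model. This is a deliberately simplified sketch: it assumes launch overhead is fully exposed (not overlapped with GPU work), and every number below is hypothetical, chosen only to show the shape of the effect rather than measured on any system.

```python
def per_kernel_launch_time_us(n_kernels, kernel_time_us, launch_overhead_us):
    """Total time when every kernel pays its own launch overhead."""
    return n_kernels * (launch_overhead_us + kernel_time_us)

def graph_launch_time_us(n_kernels, kernel_time_us, graph_overhead_us):
    """Total time when the whole sequence is launched once as a graph."""
    return graph_overhead_us + n_kernels * kernel_time_us

# Hypothetical values for one forward pass (assumptions, not measurements).
N_KERNELS = 200
KERNEL_TIME_US = 5
LAUNCH_OVERHEAD_US = 4
GRAPH_OVERHEAD_US = 20   # higher one-time cost, paid once per graph launch

individual = per_kernel_launch_time_us(N_KERNELS, KERNEL_TIME_US, LAUNCH_OVERHEAD_US)
graphed = graph_launch_time_us(N_KERNELS, KERNEL_TIME_US, GRAPH_OVERHEAD_US)
print(f"individual launches: {individual} us, graph launch: {graphed} us")
```

In this model the benefit grows as kernels get shorter relative to the launch overhead, which matches the observation above that smaller models and higher sharding degrees are most affected.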

Faster output with Speculative decoding

Text generation in LLMs is an iterative process of generating the best next token (numeric representations of words or groups of words) to a given sequence of input tokens. The input prompt word sequence is converted to a sequence of tokens by the tokenizer and fed into the model. The model processes the input token sequence, creates a list of output token options and probabilities, and selects the best next token from the group based on the inference parameters. This token is then appended to the input prompt, and fed back into the model to generate the next token. This process is repeated until the generation is complete.
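The generation loop described above can be sketched with a toy stand-in for the model. Everything here is hypothetical: the four-token vocabulary and the lookup table of logits replace a real tokenizer and LLM, and greedy selection (always taking the most probable token) is just one possible setting of the inference parameters.

```python
import math

VOCAB = ["brush", "your", "teeth", "<eos>"]

# Hypothetical "model": logits for the next token given the previous token.
LOGITS = {
    "brush": [0.1, 3.0, 0.5, 0.2],
    "your":  [0.3, 0.1, 2.5, 0.4],
    "teeth": [0.2, 0.1, 0.3, 2.0],
}

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; temperature reshapes the distribution."""
    scaled = [value / temperature for value in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def generate(prompt, max_new_tokens=8):
    """Append one token per iteration until <eos> or the token budget is hit."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = softmax(LOGITS[tokens[-1]])           # one "model call" per token
        next_token = VOCAB[probs.index(max(probs))]   # greedy selection
        if next_token == "<eos>":
            break
        tokens.append(next_token)                     # feed the output back in
    return tokens

print(generate(["brush"]))
```

The key property is that each new token requires its own model call, which is the sequential bottleneck that speculative decoding targets.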

Source: https://www.semanticscholar.org/reader/b7d12aec8a0152ec4921dfa43ab525a63b334385

Speculative decoding speeds this up by first generating a short run of draft tokens cheaply, and then having the main model check all of them in a single parallel execution. The parallel execution validates whether the initially generated draft tokens are correct for the input prompt. Once an incorrect token is detected at some position N, generation resumes from that position using the sequential token by token process, but the tokens accepted before position N are produced without a separate sequential step each, and that is where the time savings come from. The researchers behind the papers in this subfield built on the observation that many text generation steps are much easier than others, and can be handled by simpler models and techniques. It is the same reason that you can likely complete the last word of this phrase: “take your toothbrush and brush your ____”. This is how the draft tokens can be created with a high probability of being correct without needing the full compute of the main LLM.
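The draft-then-verify flow can be sketched with two toy functions standing in for the models. This is a simplified greedy variant for illustration, not OctoAI's implementation: the "target" and "draft" models are hypothetical lookups over a fixed sentence, the draft is made to guess wrong at one position, and the single parallel verification pass of a real system is simulated with a loop and counted as one pass.

```python
TARGET_TEXT = "take your toothbrush and brush your teeth every morning".split()

def target_next(tokens):
    """Toy main model: returns the next word of a fixed sentence, then <eos>."""
    i = len(tokens)
    return TARGET_TEXT[i] if i < len(TARGET_TEXT) else "<eos>"

def draft_next(tokens):
    """Toy draft model: agrees with the main model except at position 4."""
    return "wash" if len(tokens) == 4 else target_next(tokens)

def greedy_decode(prompt, max_new):
    """Baseline: one main-model pass per generated token."""
    tokens, passes = list(prompt), 0
    while len(tokens) - len(prompt) < max_new:
        nxt = target_next(tokens)
        passes += 1
        if nxt == "<eos>":
            break
        tokens.append(nxt)
    return tokens, passes

def speculative_decode(prompt, max_new, k=4):
    """Draft k tokens, verify them in one main-model pass, keep the valid prefix."""
    tokens, passes = list(prompt), 0
    while len(tokens) - len(prompt) < max_new:
        draft = []
        for _ in range(k):
            draft.append(draft_next(tokens + draft))
        # One (simulated) parallel pass yields the main model's choice at k+1 positions.
        passes += 1
        verified = [target_next(tokens + draft[:i]) for i in range(k + 1)]
        n_ok = 0
        while n_ok < k and draft[n_ok] == verified[n_ok]:
            n_ok += 1
        # Accepted draft tokens plus the main model's token at the first mismatch.
        for tok in draft[:n_ok] + [verified[n_ok]]:
            if tok == "<eos>" or len(tokens) - len(prompt) >= max_new:
                return tokens, passes
            tokens.append(tok)
    return tokens, passes

prompt = ["take", "your"]
print(greedy_decode(prompt, 10))
print(speculative_decode(prompt, 10))
```

In this toy setup both functions produce the same text, but the speculative version needs far fewer passes through the main model, because each pass can accept several draft tokens at once.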

More recent advances, in particular the EAGLE approach, build on this with further simplifications that generate the draft tokens from the same model, using an additional layer (plugin) added to the model to do its own speculation and create the draft tokens needed for the remainder of the process. This overcomes the limitations that arise from the difference in parameter counts and capabilities when using a smaller draft model, resulting in overall higher accuracy in the initial draft tokens and larger savings in overall generation. OctoAI has an internal preview implementation of speculative decoding using this approach, and we are validating this with preliminary quality and speed benchmarks.

Improved GPU utilization with Dynamic SplitFuse

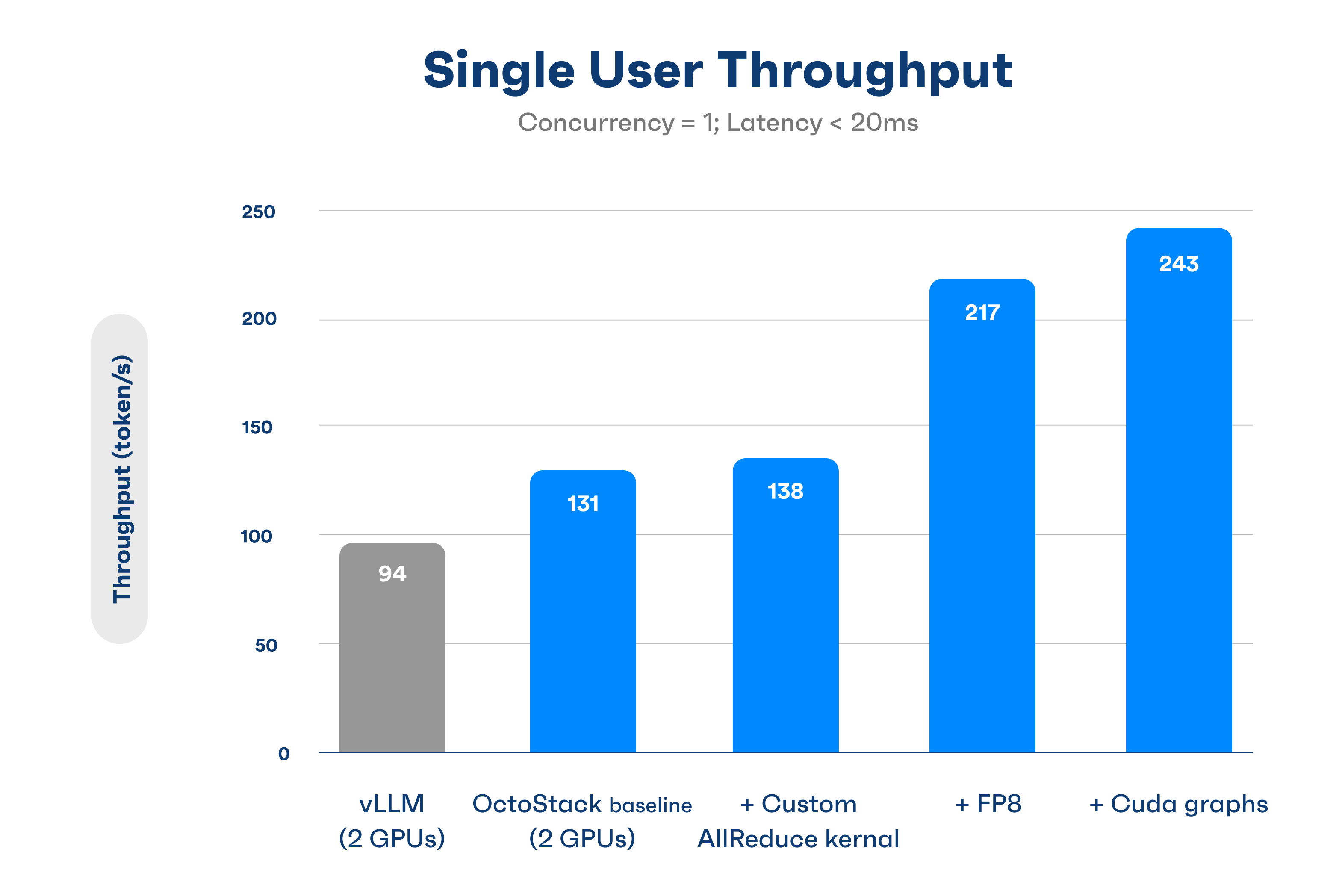

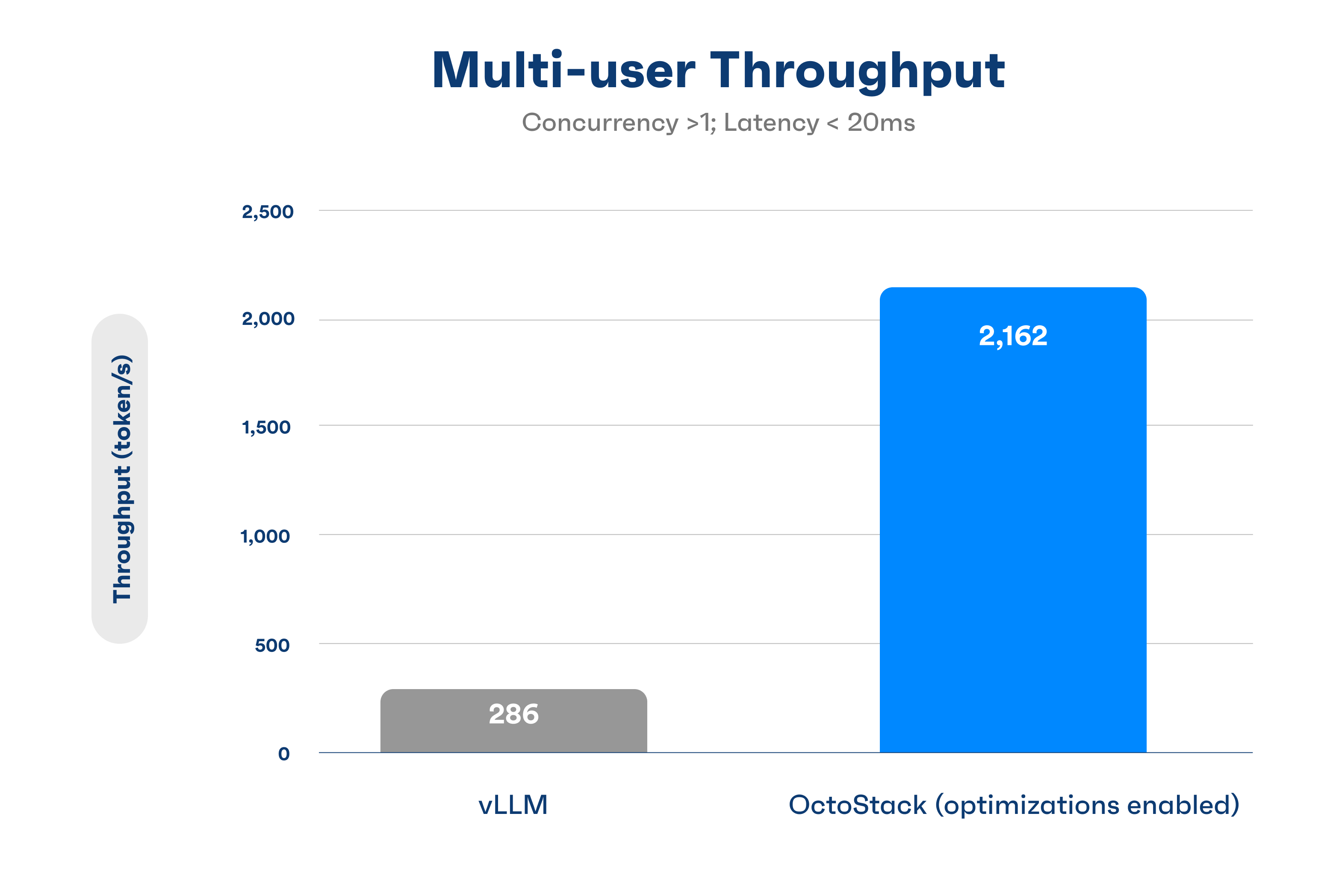

OctoStack delivers exponential improvements with scale

Core to OctoStack is the MLC compilation and serving layer, based on the MLC LLM project, which was created by a group of leading researchers in the AI community including one of the OctoAI co-founders and other Octonauts. The MLC architecture is designed to deliver efficiencies across a broad range of traffic and deployment patterns, and these benefits get exponentially higher at higher levels of concurrency and scale (typical of modern GenAI application usage patterns).

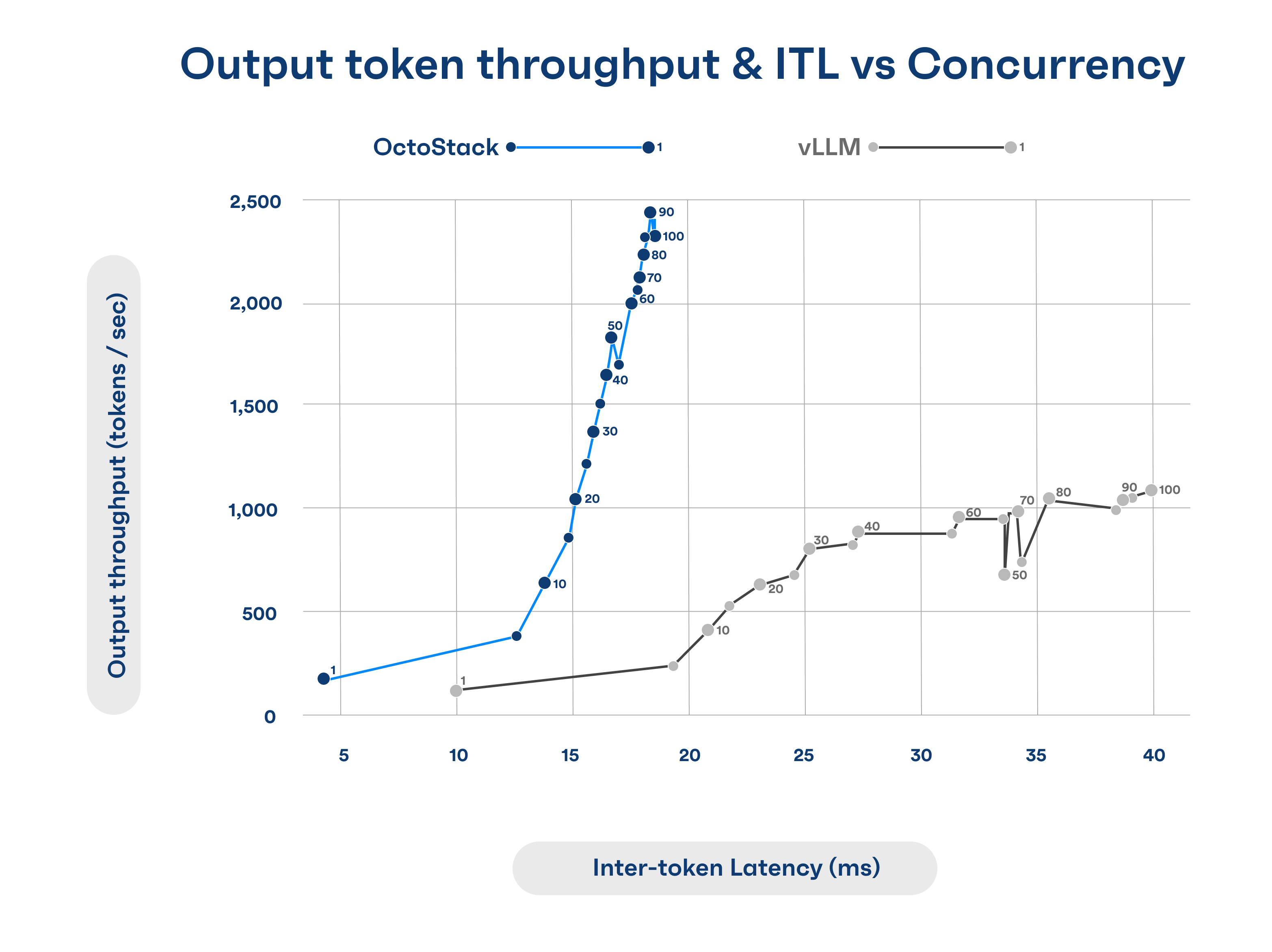

The chart here captures the results from recent internal benchmarking comparing OctoStack performance against vLLM (version 0.3.3: 82091b864af105dbe373353655dc9d8c0a6ba66f) at different concurrency levels. Larger scale deployments with tens to hundreds of concurrent users can see an order of magnitude improvement in speed and utilization, of 10x or more, well beyond the 4x to 5x improvements in smaller deployments. And as the plot shows, OctoStack customers see tremendous economies of scale as they increase usage (the ability to increase throughput and concurrency with only a marginal change in latency). These scale benefits are complementary to the techniques discussed throughout this article, and we anticipate they will continue to push the efficiency improvements delivered with OctoStack.

Source: Benchmarking of OctoStack and vLLM at multiple levels of concurrent users (load test concurrency labeled on plot), 1024 input tokens, 128 output tokens, 3 sigma deviation